HDR System with Frequency Guided Fusion

- Timofey Uvarov

- Jan 8

Updated: Jan 17

Patent describing the system:

A quarter-resolution HDR image, captured with a sensor that supports variable pixel exposure, was processed through an HDR ISP pipeline, upscaled to full resolution, and then enhanced by overlaying high-frequency components from a full-resolution image captured sequentially with the same sensor configured in linear mode. The HDR image was merged on the sensor and tone-mapped in the ISP. It was then aligned and fused with the non-HDR (LDR) image on a mobile GPU.

The image sensor has a Bayer pattern and a variable exposure pattern. Each of the four 2x2 blocks inside a larger 4x4 block was assigned a different integration time, and the four blocks were combined on the sensor using the exposure merge algorithm.

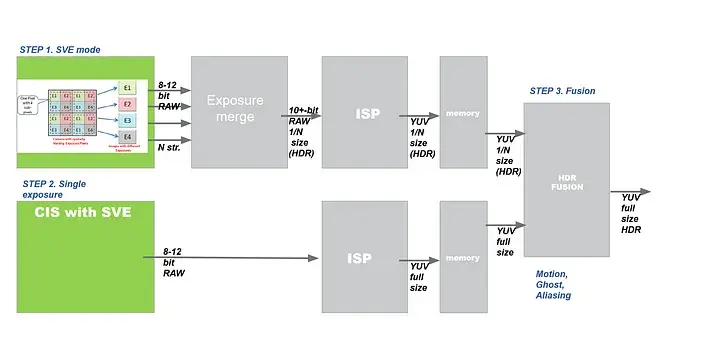

Step 1 of the diagram above shows the acquisition of an HDR image using a sensor with four spatially varying exposures. After merging, the HDR image was processed and tone-mapped in the ISP, then saved to memory.

Because the merging stage combines four 2x2 blocks into a single 2x2 block, the merged image has only a quarter of the sensor's pixel count. A lot of spatial resolution is lost, and aliasing appears.
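To make the resolution loss concrete, here is a minimal NumPy sketch of such a merge. It is an illustration under stated assumptions, not the on-sensor algorithm, which this post does not describe: the function name `merge_exposures`, the placement of the four exposures inside the 4x4 tile, the saturation level `sat`, and the plain averaging of unsaturated samples are all hypothetical choices.

```python
import numpy as np

def merge_exposures(raw, times, sat=1023):
    """Collapse each 4x4 tile (four 2x2 Bayer quads, one exposure each)
    into a single 2x2 Bayer quad. `times` is a 2x2 grid of integration
    times, indexed by the quad's position inside the tile (assumed layout)."""
    h, w = raw.shape
    assert h % 4 == 0 and w % 4 == 0, "image must be made of whole 4x4 tiles"
    # Axes: (tile_row, quad_row, bayer_row, tile_col, quad_col, bayer_col)
    t = raw.reshape(h // 4, 2, 2, w // 4, 2, 2).astype(np.float64)
    times = np.asarray(times, dtype=np.float64).reshape(1, 2, 1, 1, 2, 1)
    radiance = t / times                     # normalise by integration time
    valid = (t < sat).astype(np.float64)     # ignore clipped samples
    num = (radiance * valid).sum(axis=(1, 4))
    den = valid.sum(axis=(1, 4))
    # If every exposure is clipped, fall back to the largest estimate,
    # which comes from the shortest exposure.
    fallback = radiance.max(axis=(1, 4))
    merged = np.where(den > 0, num / np.maximum(den, 1.0), fallback)
    return merged.reshape(h // 2, w // 2)    # half the width and height
```

An 8x8 input yields a 4x4 output: each Bayer position in the result is built from four samples of the same colour taken at different exposures, which is where the dynamic range comes from and where the resolution goes.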

In step 2, a computational photography approach was introduced to restore the resolution of the HDR image. All sensor pixels were integrated with the same exposure time, and the resulting full-resolution image was processed through the ISP and saved to memory for later fusion with the HDR image.

The fusion method calculates the high-frequency component of the full-resolution LDR image and renders it on top of the upscaled HDR image.
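The idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the production GPU code: it assumes single-channel images in the range [0, 1] that are already aligned, uses a box filter as the low-pass, and upscales by pixel replication. The names `box_blur`, `fuse`, `gain`, and `radius` are hypothetical.

```python
import numpy as np

def box_blur(img, radius=1):
    """Low-pass filter: mean over a (2*radius+1)^2 window, edges replicated."""
    img = np.asarray(img, dtype=np.float64)
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def fuse(hdr_small, ldr_full, gain=1.0, radius=1):
    """Add the high-frequency detail of the LDR image to the upscaled HDR image."""
    hdr_small = np.asarray(hdr_small, dtype=np.float64)
    ldr = np.asarray(ldr_full, dtype=np.float64)
    # Upscale 2x in each dimension (quarter resolution -> full resolution).
    hdr_up = np.repeat(np.repeat(hdr_small, 2, axis=0), 2, axis=1)
    assert hdr_up.shape == ldr.shape, "LDR must be twice the HDR size per axis"
    high = ldr - box_blur(ldr, radius)       # high-frequency component
    return np.clip(hdr_up + gain * high, 0.0, 1.0)
```

Because only the detail layer of the LDR image is used, its overall brightness and clipped regions contribute little: a flat LDR area has a zero high-frequency component and leaves the tone-mapped HDR values unchanged.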

The exposure merge was done on the sensor chip, and fusion was done on the GPU.

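It is also possible to do the exposure merge on the ISP chip, but the sensor would then have to send all four exposures instead of one merged quarter-resolution frame, which increases the data throughput between the sensor and the ISP by 4x.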

The system showed remarkable results, outperforming iPhone and Pixel phones of the same generation on both dynamic range and detail reproduction. It also showed no ghosting artifacts when the background of a moving object was oversaturated in one or more of the exposures.

I presented the system demo in poster and live sessions at EI2017 and CES2017.

The Electronic Imaging Committee put the invention on the same page as Boyd Fowler’s and Brian Cabral’s talks!

demo session setup, live demonstration

the invention generated attention for our partners at OmniVision

result

The concept was developed by the OmniVision Singapore team in collaboration with a research institute, with contributions from teams in the US and China.

Alignment of the tone-mapped and linear images ran on the GPU and was developed by the OmniVision team in Shanghai.

RANSAC was sped up by adding constraints on the set of control points and by removing outliers based on user experience and memory limitations. The method is described in the following invention:

Management of ghosting artifacts was greatly improved by the OVT US team.
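For readers unfamiliar with the alignment step, the sketch below shows the general idea of RANSAC with a constraint on the control points. It is not the method from the invention referenced above: it assumes a pure-translation motion model, and the function name `ransac_translation` and the parameters `max_shift`, `tol`, `iters`, and `seed` are hypothetical. The constraint used here is that two frames captured back to back can only differ by a small shift, so matches with a larger displacement are dropped before sampling.

```python
import numpy as np

def ransac_translation(src, dst, max_shift=8.0, tol=1.0, iters=50, seed=0):
    """Estimate a global 2D shift from matched control points.
    Returns (shift, number_of_inliers)."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    disp = dst - src
    # Constraint: discard matches with an implausibly large displacement.
    # Fewer candidates means fewer wasted iterations and less memory.
    disp = disp[np.linalg.norm(disp, axis=1) <= max_shift]
    if len(disp) == 0:
        raise ValueError("no control points satisfy the displacement constraint")
    rng = np.random.default_rng(seed)
    best = np.zeros(len(disp), dtype=bool)
    for _ in range(iters):
        # Minimal sample for a translation model: a single match.
        candidate = disp[rng.integers(len(disp))]
        inliers = np.linalg.norm(disp - candidate, axis=1) <= tol
        if inliers.sum() > best.sum():
            best = inliers
    # Refine the model by averaging over the consensus set.
    return disp[best].mean(axis=0), int(best.sum())
```

Pre-filtering the control points shrinks the pool that RANSAC samples from, so a good model is likely to be found in fewer iterations, which matters on a mobile GPU with a fixed time and memory budget.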